Porting DSPy Optimizers — While Remembering the Bigger Picture

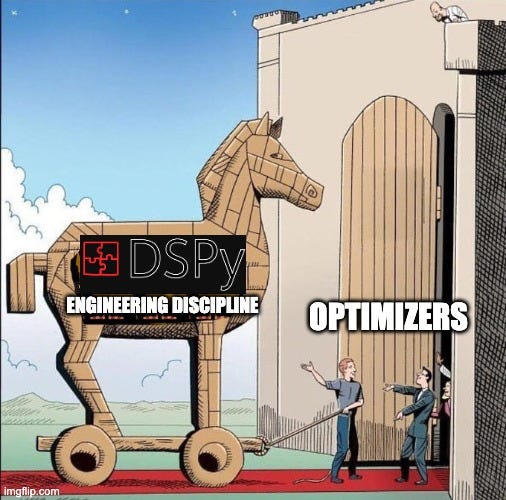

Or, How DSPy's Optimizers Are a Trojan Horse for Engineering Discipline in LLM Development

A few days ago, I mentioned on X that if 300 people wanted to see DSPy optimizers integrated with other frameworks (like LangChain or CrewAI), I'd build it publicly. I didn't get the 300 likes I was looking for, but the post drew attention from several deeply interested developers who saw the potential value immediately. Focused interest from the right people matters more than raw numbers, and it reinforces my belief in what I'm writing here. People are excited about DSPy's advanced optimization flows, and yes, optimizers are a big draw.

However, I want to emphasize something: DSPy is not just about optimizers.

There's a whole philosophy here about how to build "compound AI systems" in a way that's future-proof, evaluation-driven, and not locked into a single model. If you think of DSPy only as a library for fancy prompt or RL optimization, you're missing a large part of the story.

The Philosophy Behind DSPy

Omar Khattab, DSPy's creator, laid out five “core bets” that guide DSPy's design:

1. Information Flow is the main bottleneck.

As foundation models improve, the key becomes (a) asking the right question, and (b) providing all necessary context. DSPy addresses this with flexible control flow (“compound AI systems”) and Signatures for structured I/O.

Why I agree: Too often, we get distracted by “prompts” themselves. But what truly matters is whether each part of the LLM pipeline has the right data or context at the right time. DSPy's approach to laying out how data flows between modules is crucial for robust systems.

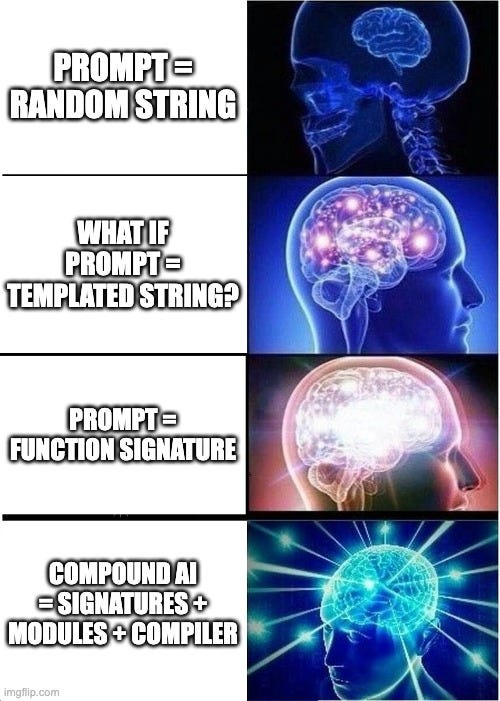

2. Interactions with LLMs should be Functional and Structured.

Rather than treat everything as chat messages or big text prompts, we should define them as functions with well-defined inputs, outputs, and instructions.

Why I agree: If you think “prompt = a random string”, you end up with a constant string-tweak headache. But if you see “prompt = function signature with inputs/outputs”, it's a lot cleaner—and more amenable to optimization.
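To make the "prompt = function signature" idea concrete, here is a minimal, framework-free sketch. The `Signature` class, `render_prompt`, and `parse_output` are hypothetical illustrations, not DSPy's actual API (in DSPy itself you would subclass `dspy.Signature` and declare fields with `dspy.InputField()` and `dspy.OutputField()`). The point is only that instructions, inputs, and outputs become explicit, inspectable parts of a spec rather than one opaque string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    """Hypothetical spec: task instructions plus named input and output fields."""
    instructions: str
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]


def render_prompt(sig: Signature, **values: str) -> str:
    """Turn a signature plus concrete input values into prompt text."""
    missing = [name for name in sig.inputs if name not in values]
    if missing:
        raise ValueError(f"missing inputs: {missing}")
    lines = [sig.instructions, ""]
    lines += [f"{name}: {values[name]}" for name in sig.inputs]
    # Ask the model to answer with one "field: value" line per declared output.
    lines.append("Respond with: " + ", ".join(f"{name}: ..." for name in sig.outputs))
    return "\n".join(lines)


def parse_output(sig: Signature, text: str) -> dict[str, str]:
    """Extract each declared output field from 'field: value' lines."""
    result = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep and name.strip() in sig.outputs:
            result[name.strip()] = value.strip()
    missing = [name for name in sig.outputs if name not in result]
    if missing:
        raise ValueError(f"model output missing fields: {missing}")
    return result


qa = Signature(
    instructions="Answer the question using the context.",
    inputs=("context", "question"),
    outputs=("answer",),
)
prompt = render_prompt(qa, context="Paris is in France.", question="Where is Paris?")
print(prompt)
print(parse_output(qa, "answer: France"))
```

Because the spec is plain data, an optimizer can later rewrite `instructions` or attach examples without touching any code that calls it.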

3. Inference Strategies should be Polymorphic Modules.

Techniques like Chain-of-Thought (CoT), ReAct, Tree-of-Thoughts (ToT), or other inference patterns should be easily swappable, just like layers in PyTorch. Each Module is generic and can apply to any DSPy Signature.

Why I agree: We want to re-use these patterns across tasks, not re-implement them from scratch. It's the difference between a well-architected library and a tangle of scripts.
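The "swappable layer" idea can be sketched in a few lines: two inference strategies that accept any signature-like spec, so switching from direct prediction to chain-of-thought is a one-line change. The `Predict` and `ChainOfThought` classes below are simplified, hypothetical stand-ins named after DSPy's modules (not their real implementation), and `fake_lm` is a stub that replaces a real model call so the example runs offline.

```python
from dataclasses import dataclass, replace
from typing import Callable

LM = Callable[[str], str]  # any function from prompt text to completion text


@dataclass(frozen=True)
class Signature:
    instructions: str
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]


class Predict:
    """Strategy 1: ask for the output fields directly."""

    def __init__(self, sig: Signature, lm: LM):
        self.sig, self.lm = sig, lm

    def __call__(self, **values: str) -> dict[str, str]:
        prompt = "\n".join(
            [self.sig.instructions]
            + [f"{k}: {values[k]}" for k in self.sig.inputs]
            + [f"{k}:" for k in self.sig.outputs]
        )
        fields = {}
        for line in self.lm(prompt).splitlines():
            name, sep, value = line.partition(":")
            if sep and name.strip() in self.sig.outputs:
                fields[name.strip()] = value.strip()
        return fields


class ChainOfThought(Predict):
    """Strategy 2: same signature, plus a 'reasoning' field requested before the outputs."""

    def __init__(self, sig: Signature, lm: LM):
        extended = replace(sig, outputs=("reasoning",) + sig.outputs)
        super().__init__(extended, lm)


def fake_lm(prompt: str) -> str:
    # Stub model: emits a reasoning line only when the prompt asks for one.
    out = "answer: 4"
    return ("reasoning: 2 + 2 = 4\n" + out) if "reasoning:" in prompt else out


math_sig = Signature("Solve the arithmetic problem.", ("question",), ("answer",))
for strategy in (Predict, ChainOfThought):  # swap strategies without touching the spec
    print(strategy.__name__, strategy(math_sig, fake_lm)(question="2 + 2?"))
```

The spec `math_sig` is never modified; `ChainOfThought` derives an extended copy, which is what lets the same task definition be reused under different inference strategies.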

4. Separate the specification of AI software behavior from learning paradigms.

Whether you do fine-tuning, RL, or iterative prompt optimization, your base definitions (Signatures and Modules) shouldn't need rewriting.

Why I agree: Historically, every time we switched from LSTMs to Transformers or from BERT to GPT-3, much of what we had built around the old model had to be redone. DSPy wants to solve that by letting us keep the same "spec" of what the system is supposed to do, while the "how" (or the training approach) can evolve over time.

5. Natural Language Optimization is powerful.

Combining fine-tuning with high-level language instructions can be extremely sample-efficient and more intuitive than purely numeric RL.

Why I agree: We've seen in practice how "prompt-level" interventions, guided by clear instructions and examples, can drastically reduce the trial-and-error cycle. Optimizers such as MIPROv2 and SIMBA, along with research that combines text-based feedback with weight updates, provide strong evidence for this.
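At its simplest, "natural language optimization" means treating the instruction text as the parameter being learned and a metric over labeled examples as the objective. The sketch below does brute-force search over a few hand-written candidate instructions. It is a deliberately naive illustration, not how MIPROv2 (which searches over instruction and demonstration candidates with Bayesian optimization) or SIMBA work. `stub_lm`, the candidates, and the tiny trainset are all hypothetical; the stub stands in for a real model so the example is deterministic.

```python
from typing import Callable

Example = tuple[str, str]  # (input text, expected label)


def accuracy(predict: Callable[[str], str], dataset: list[Example]) -> float:
    """Fraction of examples where the prediction exactly matches the label."""
    hits = sum(predict(x).strip().lower() == y.lower() for x, y in dataset)
    return hits / len(dataset)


def optimize_instruction(
    lm: Callable[[str], str],
    candidates: list[str],
    trainset: list[Example],
) -> tuple[str, float]:
    """Score every candidate instruction on the trainset and keep the best one."""
    best, best_score = candidates[0], -1.0
    for instruction in candidates:
        score = accuracy(lambda x: lm(f"{instruction}\n\nText: {x}\nLabel:"), trainset)
        if score > best_score:
            best, best_score = instruction, score
    return best, best_score


def stub_lm(prompt: str) -> str:
    # Hypothetical model: only answers in the exact label format when told to.
    text = prompt.split("Text: ")[1].split("\nLabel:")[0]
    label = "positive" if "love" in text or "great" in text else "negative"
    return label if "one word" in prompt else f"I think the sentiment is {label}."


trainset = [("I love it", "positive"), ("great value", "positive"), ("it broke", "negative")]
candidates = [
    "Classify the sentiment.",
    "Classify the sentiment. Answer with one word: positive or negative.",
]
best, score = optimize_instruction(stub_lm, candidates, trainset)
print(best, score)
```

The task and the data stay fixed; only the instruction string is learned, which is the fourth bet (separating the spec from the learning paradigm) in miniature.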

In a sense, DSPy is a "compiler" for declarative compound AI systems. You specify Signatures and Modules, and DSPy translates them into effective LLM behavior by optimizing the prompts (and, optionally, the weights) that implement them.

Why Decouple the Optimizers?

There are two perspectives on DSPy adoption that I wrestle with:

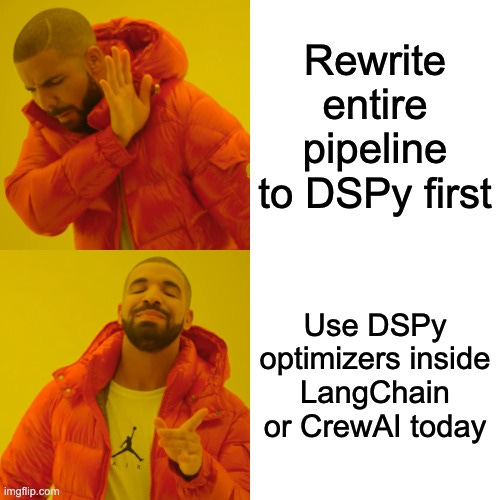

From a research perspective, the ideal approach is to build everything in DSPy from scratch. You define your entire compound AI system in a structured, type-safe way, and let the DSPy compiler and optimizers do their job. This provides the cleanest implementation, maximum synergy between components, and the full power of DSPy's abstractions.

From a practical perspective, we must acknowledge real-world constraints. Many developers have entire pipelines in frameworks like LangChain, CrewAI, or custom solutions. They might not have the time or resources to rewrite everything around DSPy's abstractions, yet they'd still love to tap into our optimizers. So it makes sense to decouple those optimizers for broader use:

It can help more people get immediate improvements without a total workflow rewrite.

It addresses real-world distribution challenges: we want DSPy's ideas to spread widely.

As more devs apply these optimizers, we can gather more datasets and benchmark results, which improves optimization research and DSPy's evolution itself.

While decoupled optimizers might not achieve their highest possible accuracy without DSPy's discrete abstractions, they can still provide significant value when integrated with other frameworks.
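One way to picture a decoupled optimizer is as a black-box search over the prompt "slots" of someone else's pipeline: the optimizer never imports the host framework; it only needs a callable that takes a dict of prompt strings plus an input and returns an output. The sketch below uses simple coordinate ascent (tune one slot at a time, holding the others fixed). The `Pipeline` type, the slot names, and `toy_pipeline` are hypothetical placeholders for, say, a LangChain or CrewAI workflow you would wrap yourself; none of this is an existing DSPy API.

```python
from typing import Callable

# A wrapped external pipeline: (prompt slots, input) -> output. The host framework stays hidden.
Pipeline = Callable[[dict[str, str], str], str]
Metric = Callable[[str, str], float]


def score(pipeline: Pipeline, prompts: dict[str, str],
          data: list[tuple[str, str]], metric: Metric) -> float:
    """Average metric of the pipeline over the dataset with the given prompts."""
    return sum(metric(pipeline(prompts, x), y) for x, y in data) / len(data)


def coordinate_ascent(pipeline: Pipeline, start: dict[str, str],
                      candidates: dict[str, list[str]], data: list[tuple[str, str]],
                      metric: Metric, rounds: int = 2) -> dict[str, str]:
    """Improve one prompt slot at a time, keeping a change only if the average metric rises."""
    best = dict(start)
    best_score = score(pipeline, best, data, metric)
    for _ in range(rounds):
        for slot, options in candidates.items():
            for option in options:
                trial = {**best, slot: option}
                trial_score = score(pipeline, trial, data, metric)
                if trial_score > best_score:
                    best, best_score = trial, trial_score
    return best


def toy_pipeline(prompts: dict[str, str], question: str) -> str:
    # Stand-in for a two-step chain: a "rewrite" step, then an "answer" step.
    query = question.upper() if "normalize" in prompts["rewrite"] else question
    answer = "PARIS" if "PARIS" in query else "unknown"
    return answer.lower() if "lowercase" in prompts["answer"] else answer


def metric(pred: str, gold: str) -> float:
    # Full credit for an exact match, partial credit if only the casing differs.
    if pred == gold:
        return 1.0
    return 0.5 if pred.lower() == gold.lower() else 0.0


data = [("capital is paris?", "paris"), ("is paris in france?", "paris")]
start = {"rewrite": "Rewrite the query.", "answer": "Answer briefly."}
candidates = {
    "rewrite": ["Rewrite and normalize the query."],
    "answer": ["Answer briefly, in lowercase."],
}
print(coordinate_ascent(toy_pipeline, start, candidates, data, metric))
```

The optimizer here sees only strings and scores, not the pipeline's internal structure. That is one plausible reason a decoupled optimizer may fall short of what it could reach inside DSPy's own abstractions, where module boundaries and intermediate outputs are explicit.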

This “distribution first, full adoption second” approach maximizes usage, fosters community contributions, and hopefully nudges teams toward the full DSPy abstractions over time.

A broader user base means more real-world data, more unique tasks, and more varied benchmarks. All of that feeds back into optimization research efforts, letting us refine algorithms and evaluate them across a wider spectrum of use cases.

The Power of Compound AI Abstraction

DSPy rests on the belief that defining your “compound AI systems” with explicit Signatures is key. By specifying each module's inputs and outputs clearly, you do more than produce a “better prompt”: you define the structure of data flow across your entire AI pipeline.

Meeting Developers Where They Are

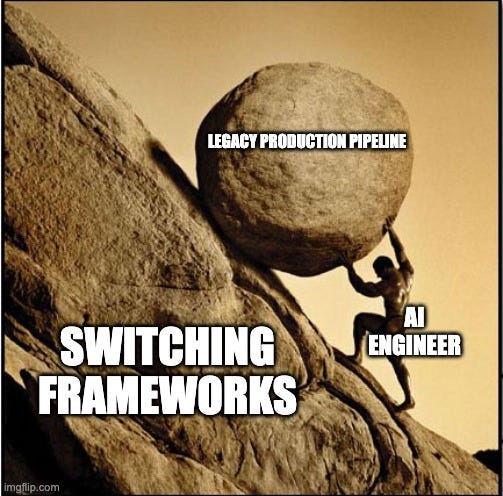

Different projects might rely on different frameworks (or no framework at all), each with unique assumptions. Some agent-based platforms don't expose the entire conversation to the dev. GUI-driven frameworks might store state in ways that don't map cleanly to DSPy Signatures. Others might rely on specialized data flows you can't trivially replicate.

That's why forcing everyone to use DSPy from the ground up can be a deal-breaker. But if they can use DSPy's optimizers from within their existing environment, they get a taste of the benefits. Hopefully, they'll then consider switching more parts of their code to DSPy's structured approach.

The Data-Driven Imperative

A big part of DSPy's approach is focusing on evaluations. If you want an optimizer, you need an objective function or at least a robust metric. Many LLM devs skip systematic evals, relying on quick “does this prompt work?” checks. At scale, that approach becomes unsustainable.

People like Eugene Yan, Hamel Husain, Shreya Shankar, and Jason Liu all emphasize, and explicitly teach, the practice of looking at data, analyzing errors, using evals, and iterating based on measurable outcomes.

They point out how crucial it is to measure each iteration’s success or failure. Doing it manually is tedious, which is why DSPy aims to make “Signatures + Evaluations + Optimizers” a cohesive process from the start.

There's an additional principle that beautifully complements Omar's bets: the reinforcement of software and ML engineering fundamentals. When LLMs first emerged, many developers (understandably) set aside these fundamentals in the excitement of "prompt engineering." We often bypassed clear problem definitions, success metrics, and test datasets. Instead, we relied on subjective judgments: "this prompt feels better than that one."

Now we're coming full circle. Just as traditional ML requires well-defined problems and loss functions, effective LLM development demands:

1. Clear problem definitions (what exactly are we trying to solve?)

2. Measurable success criteria (how do we know if we're improving?)

3. Test datasets (what examples validate our approach?)
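These three ingredients map directly onto code. Below is a minimal, framework-agnostic sketch: a task definition written down as a comment and function signature, a metric that encodes the success criterion, and a small labeled dataset, plus an `evaluate` helper that returns both the aggregate score and the failing examples so you can look at the errors, not just the number. Every name here (`extract_year`, `exact_match`, `evaluate`, the examples) is a hypothetical illustration, and the "system under test" is a trivial regex baseline standing in for an LLM call.

```python
import re
from typing import Callable, NamedTuple


class Example(NamedTuple):
    text: str
    expected: str  # the ground-truth answer for this input


# 1. Problem definition: given a sentence, return the year it refers to as four digits ("" if none).
def extract_year(text: str) -> str:
    """Baseline system under test; a real pipeline would call an LLM here."""
    match = re.search(r"\b(1[89]\d\d|20\d\d)\b", text)
    return match.group(1) if match else ""


# 2. Success criterion: exact match after trimming whitespace.
def exact_match(prediction: str, expected: str) -> bool:
    return prediction.strip() == expected.strip()


# 3. Test dataset: small, hand-labeled, and deliberately including edge cases.
DEVSET = [
    Example("The report was published in 2019.", "2019"),
    Example("The Transformer paper appeared in 2017.", "2017"),
    Example("No date here.", ""),
    Example("It was founded in '98.", "1998"),
]


def evaluate(
    system: Callable[[str], str],
    dataset: list[Example],
    metric: Callable[[str, str], bool],
) -> tuple[float, list[tuple[Example, str]]]:
    """Return the accuracy and the failures (example, prediction) for error analysis."""
    failures = []
    for ex in dataset:
        prediction = system(ex.text)
        if not metric(prediction, ex.expected):
            failures.append((ex, prediction))
    return 1 - len(failures) / len(dataset), failures


score, failures = evaluate(extract_year, DEVSET, exact_match)
print(f"accuracy = {score:.2f}")
for ex, pred in failures:
    print(f"FAIL: {ex.text!r} expected {ex.expected!r}, got {pred!r}")
```

Once a harness like this exists, any optimizer, DSPy's own or a ported one, has something concrete to maximize; the printed failure (the two-digit year) is exactly the kind of finding that manual error analysis surfaces.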

What's powerful about DSPy is how it implicitly educates developers by making these principles part of its workflow. By requiring Signatures and encouraging evaluation functions, DSPy naturally guides developers toward better practices.

But here's the key insight: porting optimizers is about distributing these foundational principles to everyone. Instead of just telling people “structured AI development is good for you”, we're saying “take this optimizer and you'll see immediate improvements—but you'll need to create datasets and evaluation metrics first.” It's a Trojan horse for better engineering practices.


Common Hurdles (and my Perspective)

Porting Production Systems: If your AI pipeline is in production, switching frameworks is tough. That's why bridging DSPy optimizers is valuable—you can start using them immediately, no major rewrite.

Future-Proofing: People worry about picking the “wrong” abstraction. But DSPy is designed to evolve as LLMs evolve, so you're not forced to keep rewriting all your prompts when new models appear.

Overhead of Structured Signatures: Defining inputs/outputs might feel like extra work. But it pays off when you run repeated optimization cycles—everything is cleaner, more robust, and easier to track.

Abstraction Diversity: We should keep pushing to define the best abstractions within DSPy, but some use cases may lead developers toward different abstractions. The key insight is that, whatever the chosen abstraction, Natural Language Optimization remains a powerful learning paradigm that can benefit every approach.

The Vision: From Optimizers to Ecosystem

Ultimately, the dream is that DSPy's structured way of thinking becomes a standard for building compound AI systems. Opening up the optimizers is a practical step toward that vision:

Immediate Gains: Devs see better performance, even if they only adopt a fraction of DSPy.

Broader Usage → More Data: As more people use DSPy optimizers, they generate valuable datasets and benchmarks. These datasets open up avenues for people to contribute and share their insights, directly feeding into future optimization research.

Full DSPy Adoption: Over time, teams realize that adding Signatures and Modules (i.e., truly structuring their AI pipelines) unlocks even greater synergy and maintainability.

Building in Public & Join the Beta

I'm committed to developing these integrations in the open—sharing code, design decisions, and prototypes publicly. This isn't just about transparency; it's about creating better tools through community feedback and real-world testing.

Our priorities for this effort are:

Developing in the open: All code, design decisions, and integration approaches will be publicly available.

Listening to feedback: Your real-world use cases and challenges will directly shape these integrations.

Emphasizing data/evals: We'll help you define metrics and gather evaluation data as you implement these optimizers.

I'd love to talk with you directly about using these integrations. If you're interested in being part of this, we've opened a Slack workspace for discussion. Join us!

In Summary

DSPy goes beyond optimizers. It is a holistic, evaluation-driven compiler for compound AI systems that future-proofs your LLM workflow through a philosophy-first design: structured Signatures, evaluation-centric loops, and modular inference. The optimizers are just one expression of that mindset.

Quick wins, long-term discipline—decoupled optimizers provide instant gains while acting as a Trojan horse for good engineering: you must gather datasets, define metrics, and measure progress.

More users → more data → better research—every new integration expands the pool of real-world tasks, fueling benchmark creation and faster innovation in optimization algorithms.

Abstraction-agnostic power—whatever framework you favor (DSPy, LangChain, CrewAI, or something custom), Natural Language Optimization remains a portable paradigm that DSPy makes easy to adopt.

Start with the optimizers, stay for the structure. Plug them into your stack today, collect data & evaluations, and you'll already be on the path toward the full DSPy approach—where Signatures, Modules, and evaluation-first design unlock deeper, longer-term returns.
